KeyLLM

Oct 12, 2023: In this video, I'm proud to introduce KeyLLM, an extension to KeyBERT for extracting keywords with Large Language Models! We will use the incredible Mistral …

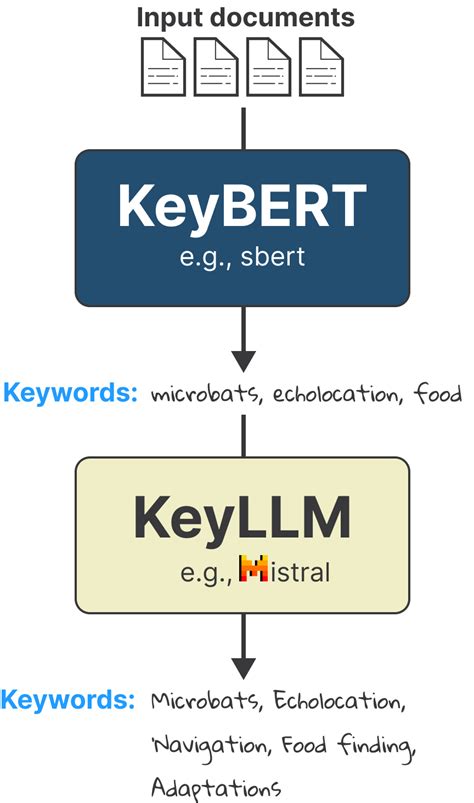

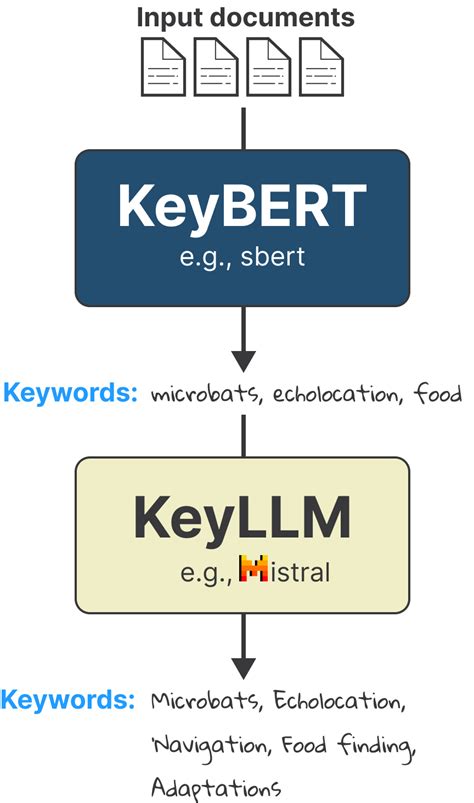

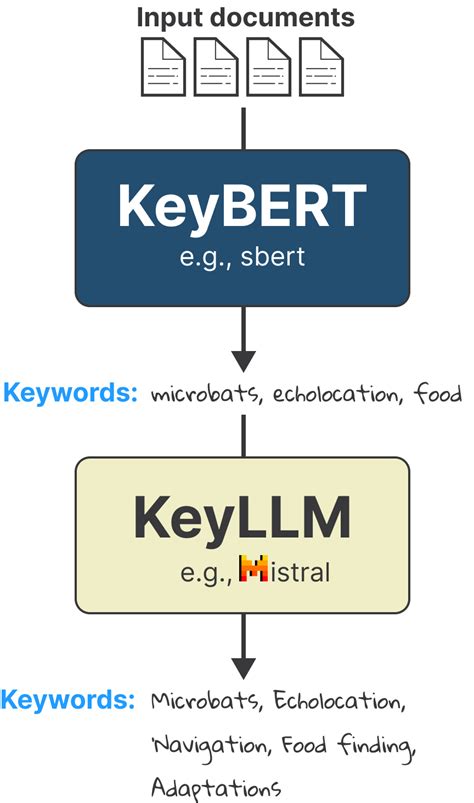

KeyLLM uses Large Language Models (LLMs) to create, extract, and fine-tune keywords for documents. It is an extension of KeyBERT, which extracts keywords with BERT embeddings …

Oct 3, 2023: KeyLLM is an extension to KeyBERT that allows you to use any LLM to extract keywords from text. Learn how to use KeyLLM with Mistral 7B, a powerful and …

Source code in `keybert/_llm.py`: `extract_keywords(self, docs, check_vocab=False, candidate_keywords=None, threshold=None, embeddings=None)` extracts keywords …

KeyLLM lets you use different large language models (LLMs) to extract keywords from documents. Learn how to install and use OpenAI, Cohere, LiteLLM, …
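The `threshold` and `embeddings` parameters in that signature relate, as we read the KeyBERT docs, to an efficiency mode in which similar documents are grouped so the LLM only has to be called once per group. The sketch below illustrates that idea with a simple greedy cosine-similarity rule and made-up toy embeddings; `group_by_threshold` is a hypothetical helper, and KeyBERT's own clustering may work differently.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def group_by_threshold(embeddings, threshold):
    """Greedily put each document into the first group whose representative
    (its first member) is at least `threshold` similar; otherwise start a new group.

    Hypothetical helper: a simplified stand-in for the grouping KeyLLM
    performs when `threshold` is set, not KeyBERT's implementation.
    """
    groups = []  # each group is a list of document indices
    for i, emb in enumerate(embeddings):
        for group in groups:
            if cosine(embeddings[group[0]], emb) >= threshold:
                group.append(i)
                break
        else:
            groups.append([i])
    return groups


# Toy 3-d "embeddings" (hypothetical values, not produced by a real model)
docs_emb = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
]
print(group_by_threshold(docs_emb, threshold=0.75))  # [[0, 1], [2]]
```

With one LLM call per group, the keywords generated for a group's representative would be reused for its other members, so these three documents would need two calls instead of three.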

Feb 5, 2024: It introduces KeyLLM, which uses Large Language Models (LLMs) to identify the keywords that best capture the essence …

KeyBERT is a minimal and easy-to-use keyword extraction technique that leverages BERT embeddings to create keywords and keyphrases that are most similar to a document. Corresponding Medium …
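KeyBERT's core idea, ranking candidate keywords by how similar their embeddings are to the document's embedding, can be illustrated with plain cosine similarity. In the sketch below the vectors are made-up stand-ins for BERT embeddings, and `rank_keywords` is a hypothetical helper rather than part of KeyBERT's API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def rank_keywords(doc_emb, candidate_embs, top_n=2):
    """Return the top_n (keyword, score) pairs, most similar to the document first."""
    scored = [(kw, cosine(doc_emb, emb)) for kw, emb in candidate_embs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Hypothetical toy embeddings (a real setup would embed text with a BERT-like model)
doc_emb = [0.8, 0.6, 0.0]
candidates = {
    "keyword extraction": [0.9, 0.4, 0.1],
    "language model": [0.6, 0.8, 0.0],
    "weather forecast": [0.0, 0.1, 1.0],
}

top = rank_keywords(doc_emb, candidates)
print([kw for kw, _ in top])  # ['keyword extraction', 'language model']
```

The unrelated candidate ends up last because its vector points in a different direction from the document's, which is exactly what the similarity ranking is meant to capture.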

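```python
import openai
from keybert.llm import OpenAI
from keybert import KeyLLM

# Create your LLM (openai>=1.0 expects an explicit client;
# older versions set openai.api_key globally instead)
client = openai.OpenAI(api_key="sk-...")  # placeholder key
llm = OpenAI(client)

# Load it in KeyLLM
kw_model = KeyLLM(llm)
```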
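An LLM answers in free text, so its output needs some post-processing before it is usable as a keyword list. The sketch below assumes the model replies with a comma-separated list, and mimics what we understand `check_vocab=True` to do: keep only keywords that literally appear in the document. `parse_keywords` and `filter_to_vocab` are hypothetical helpers, not KeyBERT functions.

```python
def parse_keywords(completion):
    """Split a comma-separated LLM completion into clean, de-duplicated keywords."""
    seen = set()
    keywords = []
    for part in completion.split(","):
        kw = part.strip().strip(".").strip()  # drop whitespace and trailing periods
        if kw and kw.lower() not in seen:     # skip empties and case-insensitive repeats
            seen.add(kw.lower())
            keywords.append(kw)
    return keywords


def filter_to_vocab(keywords, document):
    """Keep only keywords that occur verbatim (case-insensitively) in the document."""
    text = document.lower()
    return [kw for kw in keywords if kw.lower() in text]


document = "KeyLLM extends KeyBERT to extract keywords with large language models."
completion = " KeyLLM, keyword extraction, large language models, KeyBERT, keyllm."

kws = parse_keywords(completion)
print(kws)  # ['KeyLLM', 'keyword extraction', 'large language models', 'KeyBERT']
print(filter_to_vocab(kws, document))  # ['KeyLLM', 'large language models', 'KeyBERT']
```

Substring matching is a deliberate simplification: it would also accept "model" because "models" appears in the text, so a stricter version might tokenize the document first.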

vineet1409/KeyBERT_KEYLLM on GitHub: keyword extraction using KeyLLM and KeyBERT.

Learn how to extract accurate and relevant keywords using KeyLLM and Mistral 7B: install packages, load the model, and explore advanced techniques for efficient keyword …

A note about Homebrew and PyTorch: the version of LLM packaged for Homebrew currently uses Python 3.12, and the PyTorch project does not yet have a stable release of PyTorch for that version of Python.

Explore a curated list of open LLMs for commercial use, with links to code, papers, and demos (eugeneyan/open-llms).

Jul 12, 2023: Large Language Models (LLMs) have shown excellent generalization capabilities that have led to the development of numerous models. These models propose various new architectures, tweaking existing …

Loading the model

Prompt engineering

Let's start with a simple example and look at the keyword extraction results. The output is as follows:

This is where more advanced prompt engineering comes in. Like most large language models, Mistral 7B requires a specific prompt format, as shown in the figure below:

KeyBERT's [DOCUMENT] tag indicates that what follows is the document:

Extracting keywords with KeyLLM gives the following output:

Feel free to use the prompt to specify …

Mar 31, 2023: Language is essentially a complex, intricate system of human expressions governed by grammatical rules. Developing capable AI algorithms that comprehend and grasp a language poses a significant challenge. As a major approach, language modeling has been widely studied for language understanding and generation in the …

Ollama: Ollama is a tool that lets us easily access LLMs such as Llama 3, Mistral, and Gemma through the terminal. Additionally, multiple applications accept an Ollama integration, which makes it an excellent tool for faster and easier access to language models on our local machine.

All LangChain runnables expose input and output schemas to inspect their inputs and outputs: input_schema, an input Pydantic model auto-generated from the structure of the Runnable; and output_schema, an output Pydantic model auto-generated from the structure of the Runnable. LangChain provides standard, extendable interfaces and …

Apr 5, 2024: Long video question answering is a challenging task that involves recognizing short-term activities and reasoning about their fine-grained relationships. State-of-the-art video Large Language Models (vLLMs) hold promise as a viable solution due to their demonstrated emergent capabilities on new tasks. However, despite being trained on …
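KeyLLM prompts use placeholders such as `[DOCUMENT]` (and `[CANDIDATES]` for candidate keywords) that are replaced with the actual text before the prompt reaches the model, and Mistral 7B Instruct expects instructions wrapped in `[INST] ... [/INST]`. The stdlib sketch below shows both steps; the template wording and the `build_prompt` helper are hypothetical illustrations, not KeyBERT's defaults.

```python
# Hypothetical prompt template using KeyBERT-style placeholders
TEMPLATE = (
    "I have the following document:\n"
    "[DOCUMENT]\n\n"
    "Give me the keywords that are present in this document, separated by commas. "
    "Make sure you only return the keywords."
)


def build_prompt(template, document, candidates=None):
    """Fill [DOCUMENT] (and optionally [CANDIDATES]), then wrap the result
    in Mistral's instruction tags. Hypothetical helper for illustration."""
    prompt = template.replace("[DOCUMENT]", document)
    if candidates is not None:
        prompt = prompt.replace("[CANDIDATES]", ", ".join(candidates))
    return f"<s>[INST] {prompt} [/INST]"


doc = "KeyLLM extends KeyBERT with large language models."
print(build_prompt(TEMPLATE, doc))
```

If the tokenizer you use already prepends the `<s>` beginning-of-sequence token, you would drop it from the string to avoid adding it twice.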

This is the replication package for the paper MASTERKEY: Automated Jailbreaking of Large Language Model Chatbots. Large language models (LLMs), such as chatbots, have made significant strides in various fields but remain vulnerable to jailbreak attacks, which aim to elicit inappropriate responses.

Azure OpenAI parameters: model (str, required): the deployment to be used; the model corresponds to the deployment name on Azure OpenAI. api_key (str, optional): the API key required for authenticating requests to the model's API endpoint. api_type: azure. base_url (str, optional): the base URL of the API endpoint, i.e. the root address where API calls …

Aug 5, 2024: To use the Gemini API, you need an API key. You can create a key with one click in Google AI Studio. Important: remember to use your API keys securely. Review "Keep your API key secure" and then check out the API quickstarts to learn language-specific best practices for securing your API key. Verify your API key with a …
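The Azure OpenAI parameters listed here, together with the advice to keep API keys secure, suggest reading credentials from the environment rather than hard-coding them in source files. A minimal sketch under that assumption; the `AzureDeployment` class and the environment variable names are hypothetical, chosen only for illustration.

```python
import os
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AzureDeployment:
    """Hypothetical container for the parameters described above."""
    model: str                      # deployment name on Azure OpenAI (required)
    api_key: Optional[str] = None   # taken from the environment, never hard-coded
    base_url: Optional[str] = None  # root address of the API endpoint
    api_type: str = "azure"

    @classmethod
    def from_env(cls, env=os.environ):
        """Build a config from (hypothetically named) environment variables;
        fail loudly if the required deployment name is missing."""
        model = env.get("AZURE_DEPLOYMENT_NAME")
        if not model:
            raise ValueError("AZURE_DEPLOYMENT_NAME is not set")
        return cls(
            model=model,
            api_key=env.get("AZURE_API_KEY"),
            base_url=env.get("AZURE_API_BASE"),
        )


# A plain dict stands in for os.environ so the example is reproducible
cfg = AzureDeployment.from_env(
    {"AZURE_DEPLOYMENT_NAME": "my-gpt-deployment", "AZURE_API_KEY": "dummy"}
)
print(cfg.model, cfg.api_type)  # my-gpt-deployment azure
```

Accepting any mapping for `env` keeps the loader testable without touching the real process environment.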

You can add your own repository to OpenLLM with custom models. To do so, follow the format of the default OpenLLM model repository, with a bentos directory to store custom LLMs. You need to build your Bentos with BentoML and submit them to your model repository. First, prepare your custom models in a bentos directory following the …

Feb 14, 2024: NLP and LLM technologies are central to analyzing and generating human language at scale. With their growing prevalence, distinguishing between LLMs and NLP becomes increasingly …

Apr 18, 2024: Today, we're introducing Meta Llama 3, the next generation of our state-of-the-art open source large language model. Llama 3 models will soon be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake, and with support from hardware platforms offered by AMD, AWS, …
